Apple Unveils M4 Chip via iPad Pro

Focus on Boosting the Performance of the AI-Driving 'NPU'

Qualcomm Counters with a Chip Boasting Faster NPU Performance

Apple has made an aggressive move to shed its image as a laggard in the artificial intelligence (AI) era. Its AI push, initially expected to begin with the launch of the iPhone 16 this fall, has been brought forward by four months, intensifying competition in AI chip development. At the center of this competition is the NPU (Neural Processing Unit).

![[Apple Shockwave] Monster Chip Calculates 38 Trillion per Second... Now the Neural Network Competition](https://cphoto.asiae.co.kr/listimglink/1/2024051011020367950_1715306523.jpg) Internal view of the M4 chip unveiled by Apple. The brightly highlighted area is the Neural Engine responsible for AI functions. Photo by Apple


On the 7th (local time), Apple unveiled the new iPad Pro and, in a surprise, introduced the M4 chip along with it. The move exceeded expectations and is unprecedented not only in the semiconductor industry but across the IT sector as a whole. The M4 arrived just six months after the M3, whereas new chips are rarely released within a year of their predecessor. Apple launched the M1 in 2020, which caused a major stir in the semiconductor field, followed by the M2 in 2022 and the M3 in 2023, and its A-series chips for the iPhone are likewise updated once a year. Replacing the M3 with the M4 after only six months is being read as a sign that Apple has finished its chip-level preparations for the AI era ahead of schedule.

This interpretation stems from Apple's active promotion of the M4's Neural Engine (NPU), which handles AI functions. The competition is no longer about CPU processing speed but about NPU speed.

Apple describes the M4's Neural Engine as its most powerful ever. It can perform approximately 38 trillion operations per second, slightly more than the Neural Engine in the A17 Pro chip used in the iPhone 15 Pro. The Neural Engine in the A18 chip, due this fall, is expected to match or exceed the M4's. The A17 Pro's Neural Engine performs 35 trillion operations per second, about twice the A16's 17 trillion. This improvement in NPU performance lays the groundwork for Apple's full-fledged on-device AI, which is set to debut with the iPhone 16 in the second half of this year.

Apple chose the Neural Engine in the A11 Bionic chip as the internal point of comparison for the M4's Neural Engine. According to Apple, the M4's Neural Engine is 60 times faster than the A11's. The A11 powered the iPhone X, the 10th-anniversary model released in 2017, and was the first Apple chip to incorporate a Neural Engine. It was also the first time in the semiconductor industry that a Neural Engine was integrated at the system-on-chip (SoC) level. At the time, the IT industry predicted that Apple would focus on on-device AI running directly on the device itself, unlike companies such as Google that were preparing cloud-based AI.

Apple also claims that the M4 outperforms the NPUs of any existing AI PC. Among PCs currently on the market, this holds true: Intel's Core Ultra CPUs have NPUs rated at 11 trillion operations per second, and the NPUs in AMD's Ryzen 8000/Ryzen Pro 9000 processors reach only 16 trillion operations per second.

Apple may have launched the M4 preemptively to counter a 'dark horse' competitor: Qualcomm's 'Snapdragon X Elite.' Designed by former Apple engineers, the Snapdragon X Elite is the chip Qualcomm has been developing to enter the ARM-based PC market that Apple leads. Its NPU processes 45 trillion operations per second. PCs using the chip, including models from Samsung Electronics, are expected to be released soon. Intel and AMD have also announced plans to significantly improve NPU performance.
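The throughput figures quoted so far can be lined up side by side. The short Python sketch below simply restates the article's numbers (in trillions of operations per second) and computes the ratios the article describes; the chip labels and function names are illustrative choices, and since vendors may measure peak NPU throughput differently, the ratios are rough rather than definitive.

```python
# NPU throughput figures as quoted in this article, in trillions of operations per second.
# These are vendor peak numbers; measurement methods may differ between vendors,
# so the ratios below are only a rough comparison.
NPU_TOPS = {
    "Apple M4": 38,
    "Apple A17 Pro": 35,
    "Apple A16": 17,
    "Intel Core Ultra": 11,
    "AMD Ryzen 8000 series": 16,
    "Qualcomm Snapdragon X Elite": 45,
}


def ratio(a: str, b: str) -> float:
    """How many times chip a's quoted NPU throughput exceeds chip b's."""
    return NPU_TOPS[a] / NPU_TOPS[b]


def ranked() -> list[tuple[str, int]]:
    """Chips sorted from highest to lowest quoted NPU throughput."""
    return sorted(NPU_TOPS.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    print(f"A17 Pro vs A16: {ratio('Apple A17 Pro', 'Apple A16'):.2f}x")
    print(f"M4 vs A17 Pro: {ratio('Apple M4', 'Apple A17 Pro'):.2f}x")
    print(f"Snapdragon X Elite vs M4: {ratio('Qualcomm Snapdragon X Elite', 'Apple M4'):.2f}x")
    # Apple's "60 times faster than the A11" claim implies an A11 figure of about 38 / 60.
    print(f"Implied A11 throughput: {NPU_TOPS['Apple M4'] / 60:.2f} trillion ops/s")
    for name, tops in ranked():
        print(f"{name:30s} {tops:>3d}")
```

Run as-is, the A17 Pro vs A16 ratio comes out at about 2.06x, consistent with "about twice," and the ranking places the Snapdragon X Elite's 45 trillion ahead of the M4's 38 trillion, which is why Qualcomm's chip is framed as the rival here.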

Apple has gradually enhanced AI features in the iPhone and iPad, such as face recognition for unlocking the screen, photo correction, and Apple Music, applying AI ahead of competing smartphone manufacturers. However, the emergence of generative AI such as ChatGPT changed the landscape, and Apple, having focused cautiously on on-device AI, was left behind by the broader trend.

Although the M4 delivers strong Neural Engine performance, Apple has yet to release AI features that meet the expectations consumers have formed since ChatGPT. Features such as the real-time translation praised in the Galaxy S24 have not yet appeared. Apple is instead promoting features such as real-time subtitles, visual search that identifies subjects in videos or photos, separation of backgrounds from subjects in videos, and automatic real-time generation of sheet music from a piano performance. This may change, of course, at Apple's WWDC 2024 next month.

Apple's shift in chip strategy first became apparent with the A17 Pro chip used in last year's iPhone 15 Pro, when Apple created a new line by naming the iPhone's A chip 'Pro' instead of 'Bionic.' It remains unclear whether the iPhone 16 launching this year will feature the A17 Bionic or continue using the A17 Pro. The status of the high-end M3 chips (Pro, Max, and Ultra) has also become confusing with the arrival of the M4.

Apple is also making various attempts to leverage its strong semiconductor design capabilities in response to the generative AI era. The Wall Street Journal reported that Apple is developing its own AI chips for data centers, and Bloomberg reported that Apple is building its own AI servers using the M2 Ultra chip. This is understood as a plan to dramatically improve AI responsiveness by combining the high-performance chips it already has with new AI chips.

This mirrors the strategy of Nvidia, the leading AI semiconductor company, which is reportedly developing ARM-based CPUs separately from its AI graphics processing units (GPUs). Nvidia and Apple are each expanding outward from their respective strengths. Samsung has also enhanced the NPU in the Exynos 2400 chip used in the Galaxy S24, and says there is little difference in NPU performance between Qualcomm's Snapdragon and its Exynos.

Apple-focused outlet Cult of Mac predicts that if Apple's plans proceed smoothly, users of iPhones, iPads, and Mac computers will be able to use both on-device AI and cloud-based AI.

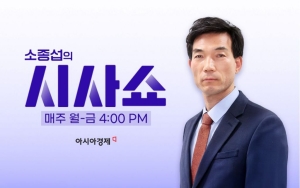

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.

![[Apple Shockwave] Monster Chip Calculates 38 Trillion per Second... Now the Neural Network Competition](https://cphoto.asiae.co.kr/listimglink/1/2024051211073368997_1715479653.jpg)

![[Apple Shockwave] Monster Chip Calculates 38 Trillion per Second... Now the Neural Network Competition](https://cphoto.asiae.co.kr/listimglink/1/2024051211393569017_1715481575.png)