Detecting and Blurring Explicit Photos in Minors' DMs

Move Comes Amid Lawsuit Pressure from 41 U.S. State Governments and Others

Instagram, which faces lawsuits in the United States alleging that it harms minors' mental health, has introduced new measures that use artificial intelligence (AI) to strengthen protections for young users.

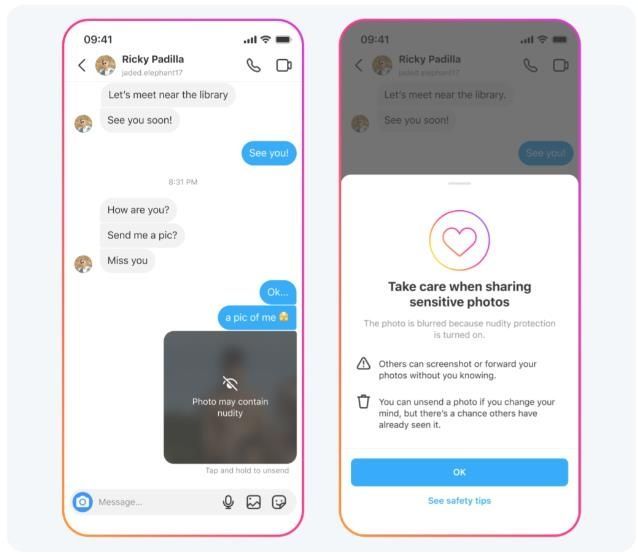

On the 11th (local time), Instagram's parent company Meta Platforms (Meta) announced that it is developing new features to shield teenagers from excessive exposure to explicit photos and from sexual exploitation crimes, and to make it harder for potential offenders to interact with minors. Meta revealed that it is testing an AI-based tool that automatically detects and blurs explicit photos sent to minors through Instagram's Direct Message (DM) system.

For users under 18, images containing nudity are blurred by default and shown behind a warning screen. Recipients can then decide whether to view the image without first being exposed to it. Adult users receive a notification encouraging them to turn the feature on. Meta also provides options to block the sender of such images and to report the chat, and a message reminds users that they are under no obligation to respond to such approaches. Meta added that it is also developing technology to help identify accounts that may be involved in sexual exploitation scams.

Earlier, in January, Meta announced plans to automatically block harmful content so that minors are not exposed to it. The new measures come amid mounting pressure from authorities, including lawsuits filed against Meta in October last year by 41 U.S. state governments, which allege that Instagram and Meta's other platforms are designed to be excessively addictive and harm minors' mental health.

© The Asia Business Daily(www.asiae.co.kr). All rights reserved.